Combatting reverse shell bots with honeypots

What do you do when it's too early in the morning to figure out fail2ban and you need to stop crude bot attacks?

It was 8 am on a Friday, the day after we had released the release trailer for a long-awaited update to a project I develop software for. I was barely awake when I saw multiple pings on Discord saying our production server was barely holding up. Requests were taking 5-20 seconds, and had been for a while.

Only a few hours earlier, we had held a wonderful launch event for the trailer. A somewhat slow website seemed normal at the time, since there were A LOT more concurrent requests than usual. Hours later, though, I wasn’t so sure anymore. In situations like this, the first thing I check is whether I caused the semi-outage myself. After all, along with the new desktop client release, I had also deployed a server update that supports some of the new features and adds a fancy new download page.

A quick look back at the commits in our repo didn’t turn up anything, though. Time to get out good ol’ ssh; maybe something odd was happening on the machine itself. If there is one thing I have learned, it’s that when ssh logins take too long, something’s up. And slow it was.

First, I tried to isolate the issue. Was the server simply overworked? Was there a suspicious or long-running process? In htop, I only saw the usual few processes: some mysql connection processes and some php-fpm processes. What was odd, though, was that the system was above maximum load, hitting swap at 130% CPU usage.

While our server software is a bit older (development began in 2019 and we haven’t done a big rewrite yet), I do think it is pretty performant. So something suspicious had to be going on. Time to hit the access logs!

This is not WordPress

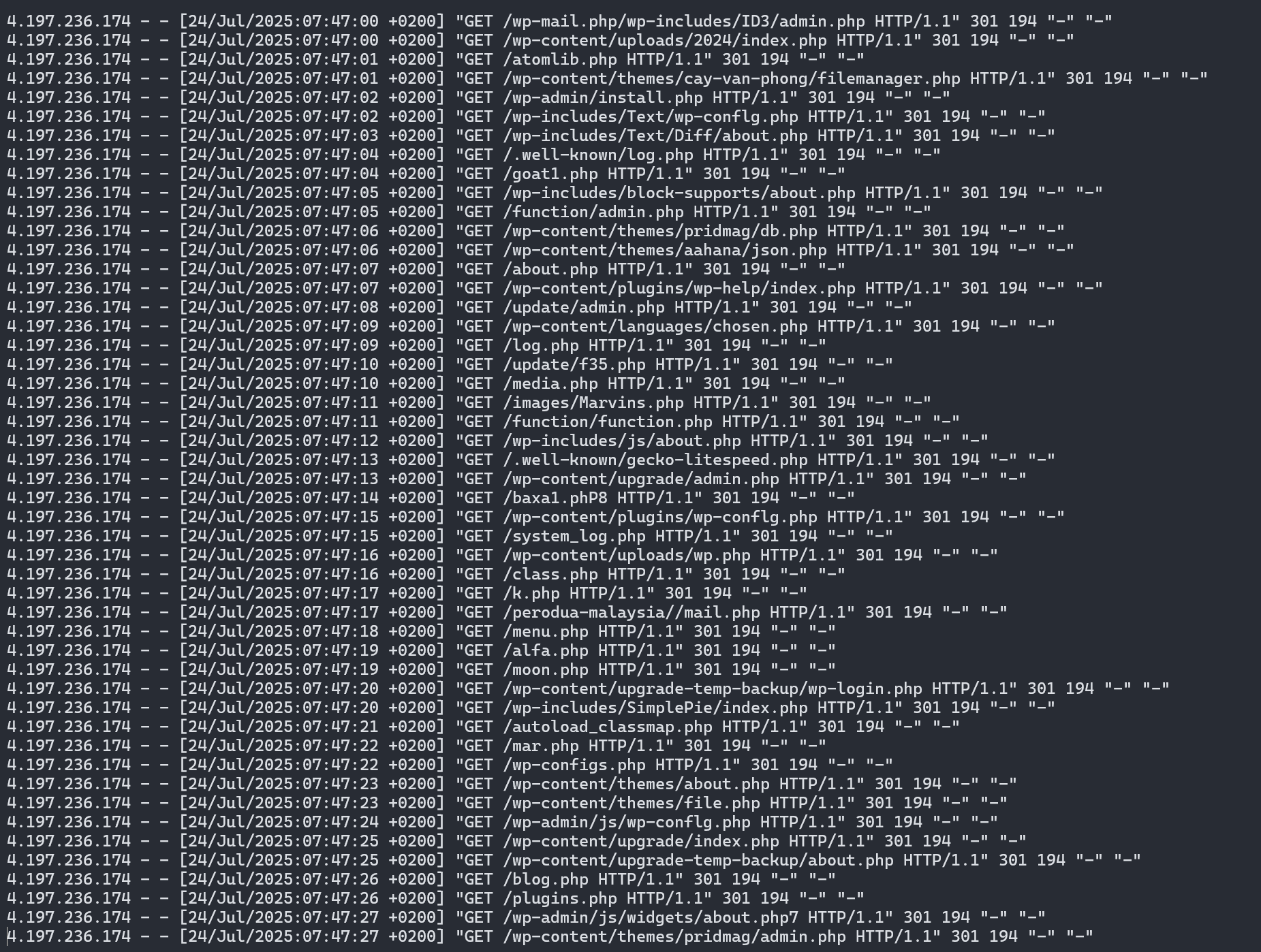

Hundreds, if not thousands, of requests were visible in the access logs, from multiple IPs, but always in groups with one second of delay between requests. All of them were trying to access various login- or config-related files (like .env or .git/) or reverse shell scripts, with a heavy focus on WordPress. For some context: our service runs on Symfony. It has never hosted a WordPress instance and never will (especially not after seeing how many themes and plugins seemingly have a reverse shell hidden in their files).
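You can confirm that kind of rhythm without scrolling through the raw file: a short script can group the log by client IP and print the gaps between consecutive requests. This is a minimal sketch that assumes nginx’s default `combined` log format. The function names and the sample lines used to test it are made up for illustration.

```python
import re
from collections import defaultdict
from datetime import datetime

# Start of nginx's default "combined" log format:
# $remote_addr - $remote_user [$time_local] "$request" $status ...
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3})'
)


def parse(lines):
    """Yield (ip, timestamp, path, status) for each line in combined format."""
    for line in lines:
        m = LOG_LINE.match(line)
        if m is None:
            continue  # skip lines that don't fit the pattern
        ts = datetime.strptime(m["time"], "%d/%b/%Y:%H:%M:%S %z")
        yield m["ip"], ts, m["path"], int(m["status"])


def intervals_per_ip(lines):
    """Map each client IP to the seconds between its consecutive requests."""
    stamps = defaultdict(list)
    for ip, ts, _path, _status in parse(lines):
        stamps[ip].append(ts)
    result = {}
    for ip, times in stamps.items():
        times.sort()
        result[ip] = [(b - a).total_seconds() for a, b in zip(times, times[1:])]
    return result
```

To run it against a real log, use something like `intervals_per_ip(open("/var/log/nginx/access.log"))`. That path is the usual Debian/Ubuntu default, so check your `access_log` directive. Lists full of `1.0` values are the one-second pattern described above.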

Some of the requests even tried their luck with php://input to gain shell access, or tried to traverse directories via ../../../../../bin/sh. Now, I might not be a trained DevOps engineer, but I’ve been expanding my horizons as much as I can over the last ~10 years. I have always believed that IF I run a dedicated server or vServer, I should at least understand it well enough to secure it properly. I get loads and loads of spam from taken-over WordPress installations and don’t want to be the next one in the botnet.

So naturally, all of those requests hit a 404. No access was granted and no security was breached. But why was the server still struggling? Multiple IPs requesting the same nonexistent files can be somewhat straining, but it shouldn’t have hit us as hard as it did.

Custom 404s

Custom 404 pages are awesome! Instead of a white screen with a very authentic, Times New Roman-looking “Not Found”, you can integrate error handling into your website’s style and direct visitors to a different place that might be of value to them. The thing they were looking for is not there, but maybe something else fits their needs.

We also have a custom 404 (and 403, and 500) deployed. And it has been really effective, maybe too effective. You see, without any special configuration, Symfony is set up so that ALL HTTP errors display your custom error page.

In our case, this meant that every request from every IP, every second, ended up in PHP. With a typical Symfony nginx setup, any path that isn’t a real file is handed to the front controller. That boots Symfony, which then renders our custom 404 page with all the fancy styling, assets and database queries it needs.
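A back-of-the-envelope model shows why a page that feels cheap can still take a server down. Suppose a PHP-FPM pool has a fixed number of workers and each request keeps one busy for a given time. The pool can then finish at most workers / time requests per second. Once requests arrive faster than that, they queue, and every visitor waits longer, real users included. The numbers in the example are hypothetical placeholders, not measurements from this incident.

```python
def fpm_capacity(workers: int, seconds_per_request: float) -> float:
    """Most requests per second a pool can finish if each one keeps a worker busy this long."""
    return workers / seconds_per_request


def utilization(arrival_rate: float, workers: int, seconds_per_request: float) -> float:
    """Offered load divided by capacity; at or above 1.0 the queue keeps growing."""
    return arrival_rate / fpm_capacity(workers, seconds_per_request)


def queue_delay(backlog: int, workers: int, seconds_per_request: float) -> float:
    """Seconds a new request waits when `backlog` requests are already queued ahead of it."""
    return backlog / fpm_capacity(workers, seconds_per_request)


if __name__ == "__main__":
    # Hypothetical numbers, NOT measured values:
    workers = 10        # e.g. pm.max_children = 10
    render_404 = 0.15   # 150 ms to boot Symfony, query the database and render the 404 page
    bot_rate = 100.0    # bot requests per second
    print(f"capacity:    {fpm_capacity(workers, render_404):.1f} req/s")
    print(f"utilization: {utilization(bot_rate, workers, render_404):.2f}")
    print(f"wait behind 1000 queued requests: {queue_delay(1000, workers, render_404):.1f} s")
```

With utilization above 1.0, the backlog only shrinks once the bots stop or the per-request cost drops. Turning a 150 ms Symfony render into a near-zero nginx response is the second option.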

It was at this point that I physically face-palmed. Every bot request went through the unnecessarily resource-intensive 404 page. Okay, this one’s on me… Now what do we do?

Too early for fail2ban

After some web searching, I figured it might be a good idea to implement some anti-bot protection. fail2ban came to mind, and I read some documentation and guides on how to deploy it with our nginx setup. Well, remember when I said it was early in the morning? I tried to set up both fail2ban and ufw with the necessary configuration, but ultimately I wasn’t confident enough in it. My tests of the config went wrong, and for a brief moment ALL requests were blocked. So I decided to scrap this plan for now and come back to it later that day, once I had learned enough.

For now, we needed an interim solution. One that will, as usual, end up being permanent. So I thought: what if I could add some sort of early return, so that those requests fail immediately instead of spinning up PHP?

The honeypot solution

While studying the logs, I noticed a pattern: every IP requested /wp-admin or /wp-content at least once in its attempts to find a compromised plugin or theme with a hidden shell script. There were other common files they tried to request as well (such as .env).
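Checking that pattern against the logs means two things: collecting every IP that ever touched a tell-tale path, and measuring how much of the total traffic those IPs produced. Below is a minimal sketch. It assumes the log has already been parsed into (ip, path) pairs, and `TRAP_PREFIXES` is a hypothetical list modeled on the paths mentioned here.

```python
from collections import Counter

# Hypothetical trap list: paths a Symfony site never serves, so any client
# requesting them is almost certainly a scanner.
TRAP_PREFIXES = ("/wp-admin", "/wp-content", "/wp-login.php", "/.env", "/.git")


def trapped_ips(requests):
    """Return {ip: trap_hits} for every IP that requested a trap path at least once."""
    hits = Counter()
    for ip, path in requests:
        if path.lower().startswith(TRAP_PREFIXES):
            hits[ip] += 1
    return dict(hits)


def share_from_trapped(requests):
    """Fraction of all requests sent by IPs that touched a trap path at some point."""
    requests = list(requests)
    if not requests:
        return 0.0
    flagged = set(trapped_ips(requests))
    return sum(1 for ip, _path in requests if ip in flagged) / len(requests)
```

A share close to 1.0 during the incident would mean nearly all of the load came from clients that had already given themselves away.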

The first course of action was to add these few lines to our nginx config:

    # Regex locations are tried in the order they appear; keep this one above any
    # other regex location (such as ~ \.php$) that could also match these paths.
    location ~* /(wp-login|wp-admin|xmlrpc\.php|\.env|\.git|\.htaccess|admin|setup\.php|config\.php) {
        return 444;
    }

This alone already helps a lot. For every request to a known path that we either don’t have (WordPress) or don’t want to expose (the .env file), nginx skips everything else and immediately returns 444, “Connection Closed Without Response”. 444 is not a standard HTTP status code but an nginx-specific one: it tells nginx to close the connection without sending any response at all. That makes it the perfect code for requests that should simply be dropped.
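nginx regex locations are not anchored, so this pattern matches the listed words anywhere in the path, and `~*` makes it case-insensitive. That is convenient against bots. It also catches paths like /administrator or /api/admin/users, which matters if the Symfony app ever serves a route containing /admin. The sketch below replays the same regex with Python’s `re` module so you can check a list of paths before deploying. It only looks at the path, because nginx ignores the query string when matching locations. It does not model nginx’s percent-decoding or `../` normalization. The function name is hypothetical.

```python
import re

# Same pattern as the nginx location; "~*" means case-insensitive matching.
# Like every nginx regex location it is unanchored, so it matches anywhere in the path.
HONEYPOT = re.compile(
    r"/(wp-login|wp-admin|xmlrpc\.php|\.env|\.git|\.htaccess|admin|setup\.php|config\.php)",
    re.IGNORECASE,
)


def is_trapped(uri: str) -> bool:
    """True if the honeypot regex would match this request URI's path."""
    path = uri.split("?", 1)[0]  # locations are matched against the path, not the query string
    return HONEYPOT.search(path) is not None


if __name__ == "__main__":
    for uri in ["/wp-login.php", "/WP-Admin/", "/.env", "/download",
                "/administrator/", "/api/admin/users", "/search?q=admin"]:
        print(f"{uri:20} {'trapped' if is_trapped(uri) else 'passes'}")
```

If a legitimate route shows up as trapped, tightening the alternative (for example `/admin/` or `^/admin`) is usually enough.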

But this alone was not enough. I also wanted to rate-limit bots HARD for even daring to request these paths.

    # limit_req_zone is only allowed in the http {} context.
    limit_req_zone $binary_remote_addr zone=bad_bots:10m rate=1r/m;

    location ~* /(wp-login|wp-admin|xmlrpc\.php|\.env|\.git|\.htaccess|admin|setup\.php|config\.php) {
        limit_req zone=bad_bots burst=3 nodelay;
        return 444;
    }

Now, this is a classic honeypot. The idea is that every client address requesting one of these paths is tracked in the bad_bots zone (10 MB of shared memory). Once it has used up a burst of 3 requests, it is limited to 1 request per minute on these paths.

This should be enough to break their request pattern. The requests still show up in the logs, so we can keep an eye on them and take more serious measures later.
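`limit_req` uses the “leaky bucket” method, tracked per key (here the client address). Each request adds one unit of excess, and the bucket drains at the configured rate. A request is rejected once the excess would exceed `burst`, with status 503 unless `limit_req_status` says otherwise. `nodelay` serves the allowed burst immediately instead of spacing it out. Below is a simplified Python model of that logic, written for illustration. It is not nginx’s code and ignores its millisecond and integer rounding. One thing worth verifying in your own setup: `return` belongs to the rewrite module and runs in the rewrite phase, which comes before the preaccess phase where `limit_req` is evaluated. In a location that ends with `return 444`, the rate limit may therefore never be consulted.

```python
from dataclasses import dataclass, field


@dataclass
class LeakyBucketLimiter:
    """Simplified model of nginx limit_req with `nodelay` (illustration only)."""
    rate_per_min: float   # e.g. 1 for rate=1r/m
    burst: int            # e.g. 3 for burst=3
    state: dict = field(default_factory=dict)  # key -> (excess, time of last accepted request)

    def allow(self, key: str, now: float) -> bool:
        if key not in self.state:
            # First request from this key: accepted, bucket starts empty.
            self.state[key] = (0.0, now)
            return True
        excess, last = self.state[key]
        leaked = max(0.0, now - last) * self.rate_per_min / 60.0
        new_excess = max(0.0, excess - leaked + 1.0)
        if new_excess > self.burst:
            # Rejected requests leave the stored state untouched.
            return False
        self.state[key] = (new_excess, now)
        return True


if __name__ == "__main__":
    limiter = LeakyBucketLimiter(rate_per_min=1, burst=3)
    print([limiter.allow("203.0.113.7", 0.0) for _ in range(5)])
    print(limiter.allow("203.0.113.7", 60.0), limiter.allow("203.0.113.7", 61.0))
```

With rate=1r/m and burst=3, this model lets an address through four times in quick succession, then roughly once per minute after that.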

There is one more thing I added to piss off those bots a bit more. If you look at the screenshot of the logs above again, you’ll see that some requests come in with spoofed, legitimate-looking user agents. A lot of them also don’t seem to send one at all: their user agent shows up as - in the log, which is how nginx records a missing header.

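    # A missing User-Agent is logged as "-" but compares as "" here; match both.
    if ($http_user_agent ~ "^-?$") {
        return 444;
    }

If your user agent is missing, empty or literally -, you don’t get anything.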
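The `-` in the log is a formatting convention. nginx writes `-` for a logged variable that has no value, so a missing User-Agent header and a literal `User-Agent: -` look identical in the access log. Inside an `if`, however, a missing header evaluates to an empty string, so a check for `= "-"` alone would not catch clients that send no user agent at all. The small Python model below spells out that difference, along with the regex `^-?$`, which matches both. The helper names are hypothetical; they only mirror nginx’s behavior and are not part of it.

```python
import re
from typing import Optional

# One nginx condition covering both cases: if ($http_user_agent ~ "^-?$")
EMPTY_OR_DASH = re.compile(r"^-?$")


def logged_user_agent(header: Optional[str]) -> str:
    """How the access log shows $http_user_agent: '-' when the header was not sent."""
    return "-" if header is None else header


def if_user_agent(header: Optional[str]) -> str:
    """What an nginx `if` compares against: a header that was not sent becomes ''."""
    return "" if header is None else header


def is_blocked(header: Optional[str]) -> bool:
    """True if the regex condition would match (None means the client sent no User-Agent)."""
    return EMPTY_OR_DASH.match(if_user_agent(header)) is not None
```

Because `logged_user_agent(None)` and `logged_user_agent("-")` both return `-`, the log alone can’t tell the two cases apart, which is why the condition should cover both.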

Results & conclusion

In the end, this interim solution works well enough for the morning, until I have some time after today’s work to review the situation properly and add better countermeasures. Traffic dropped from hundreds of requests per second to about 5 every few minutes, all of which end up in the honeypot rules.

The website was available again, and CPU usage in htop went from 130% to 20-30%.